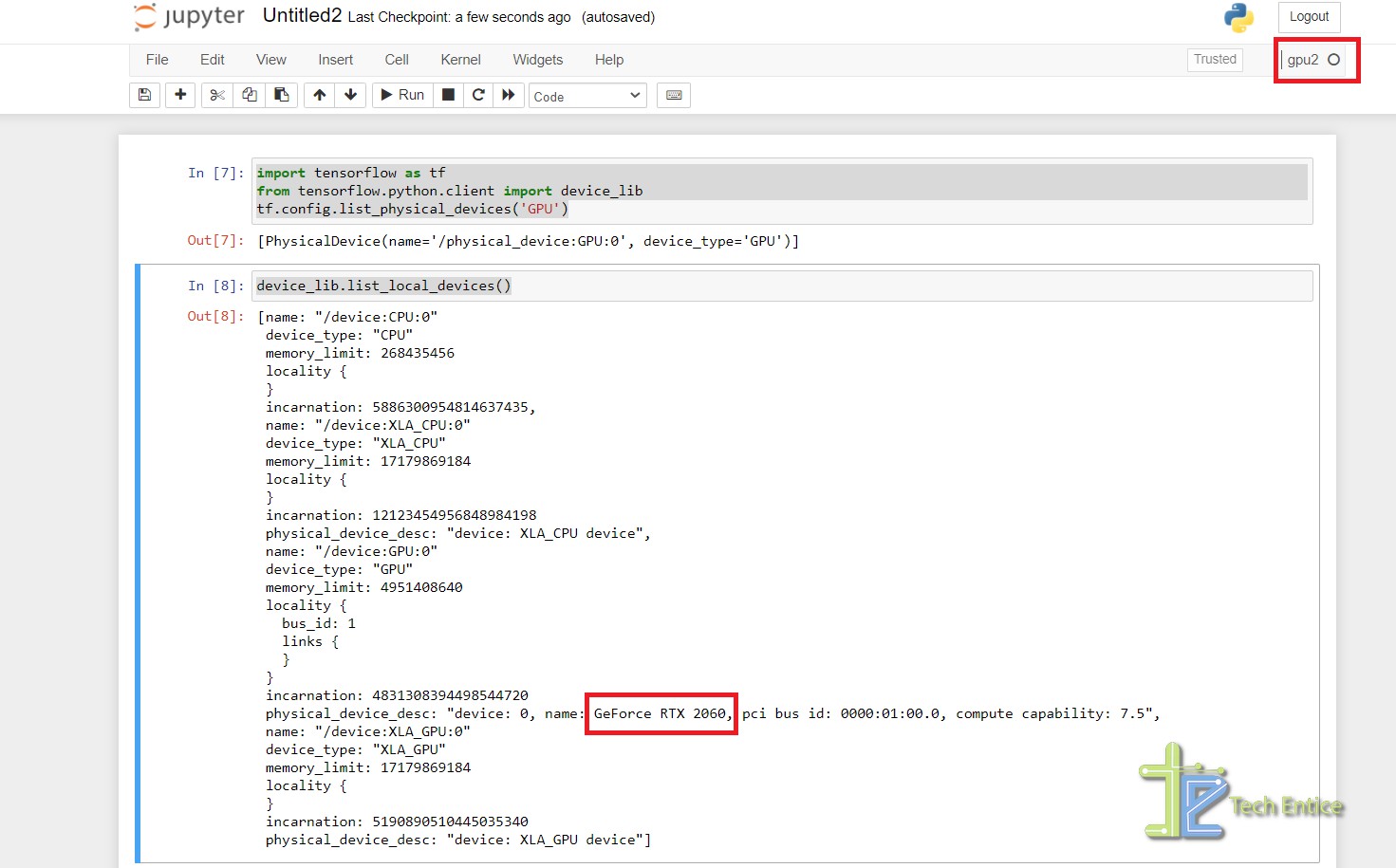

tensorflow - Why do I get an OOM error although my model is not that large? - Data Science Stack Exchange

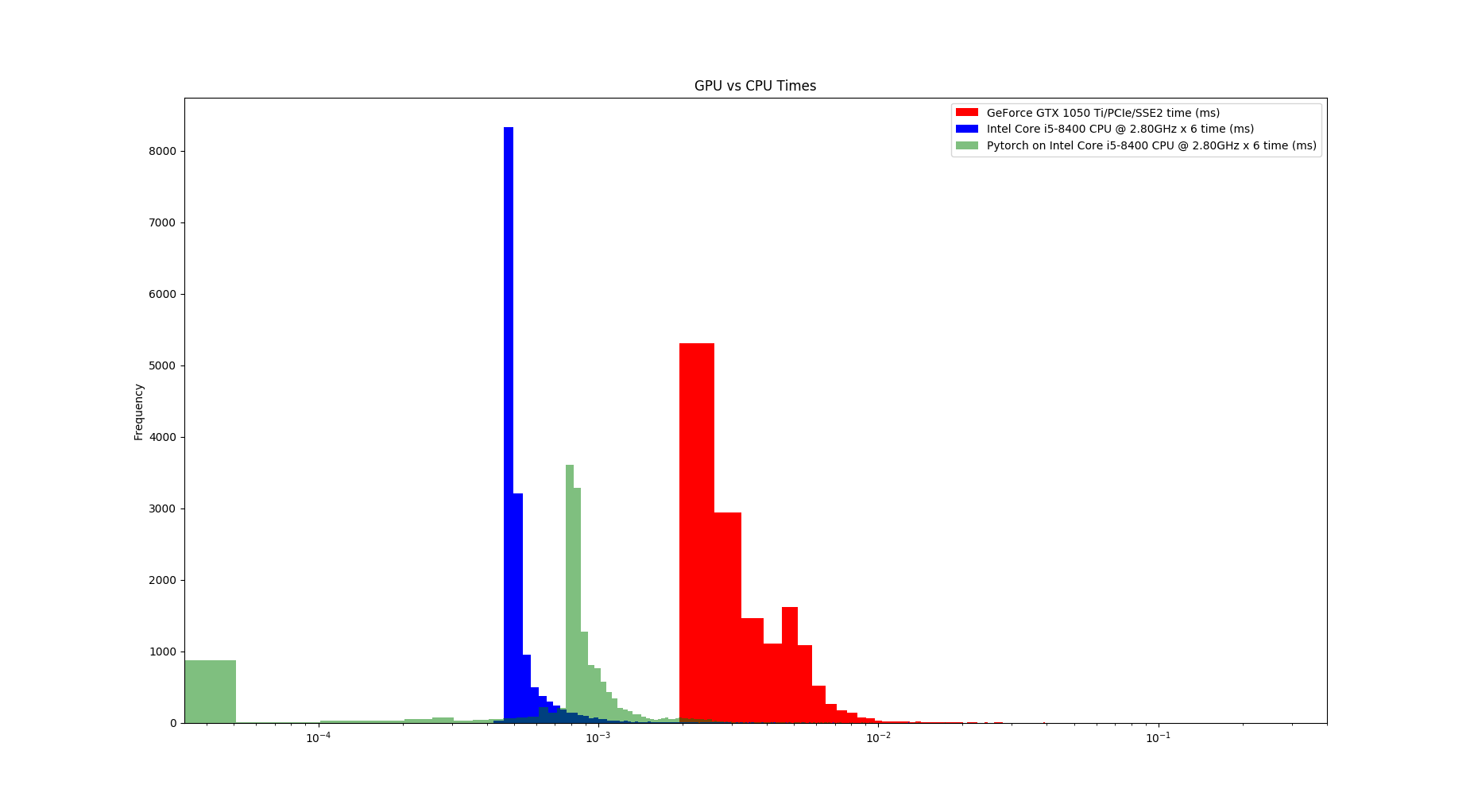

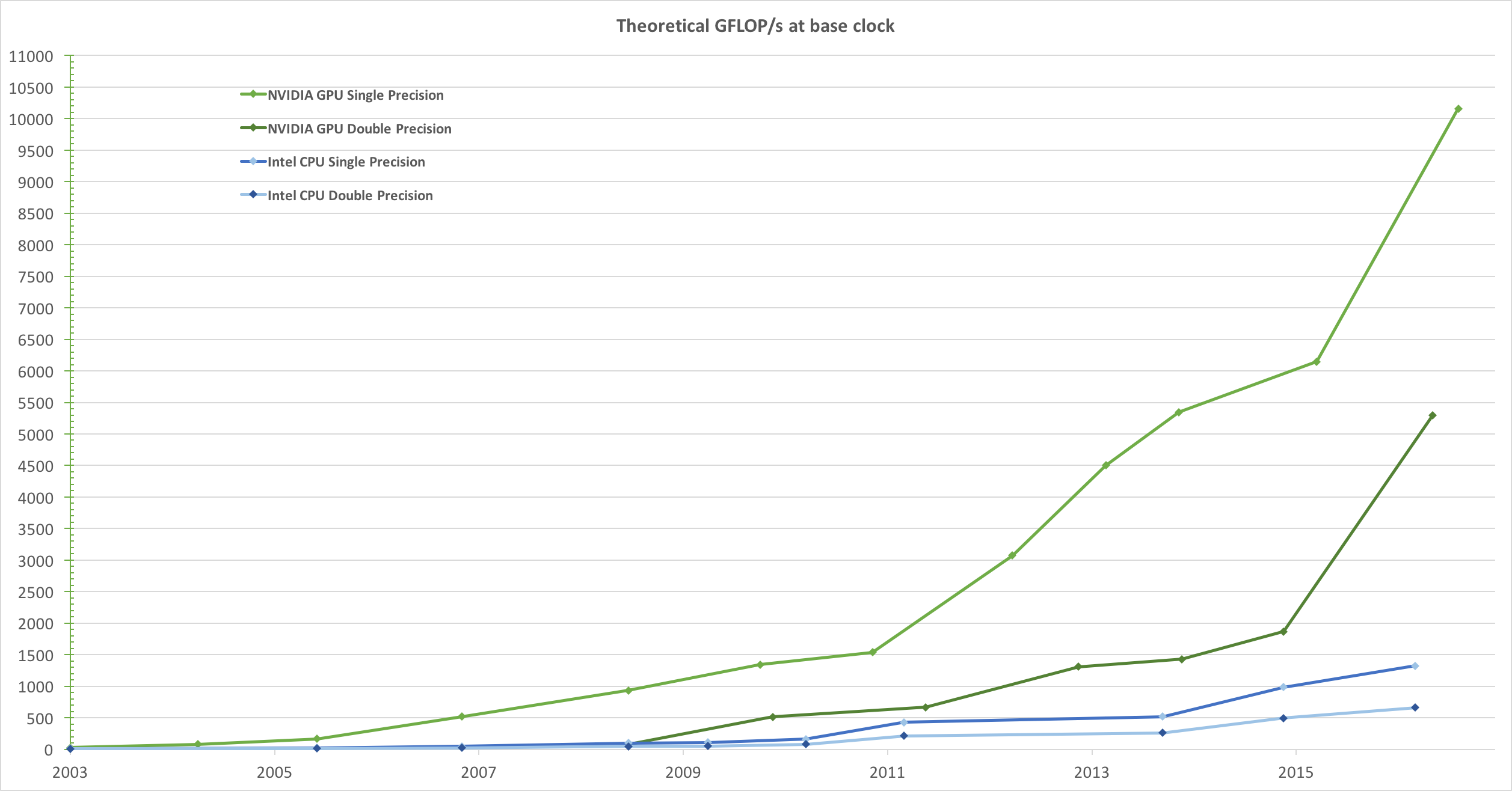

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

Hands-On GPU Programming with Python and CUDA: Explore high-performance parallel computing with CUDA: Tuomanen, Dr. Brian: 9781788993913: Books - Amazon

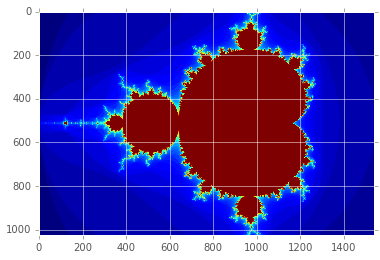

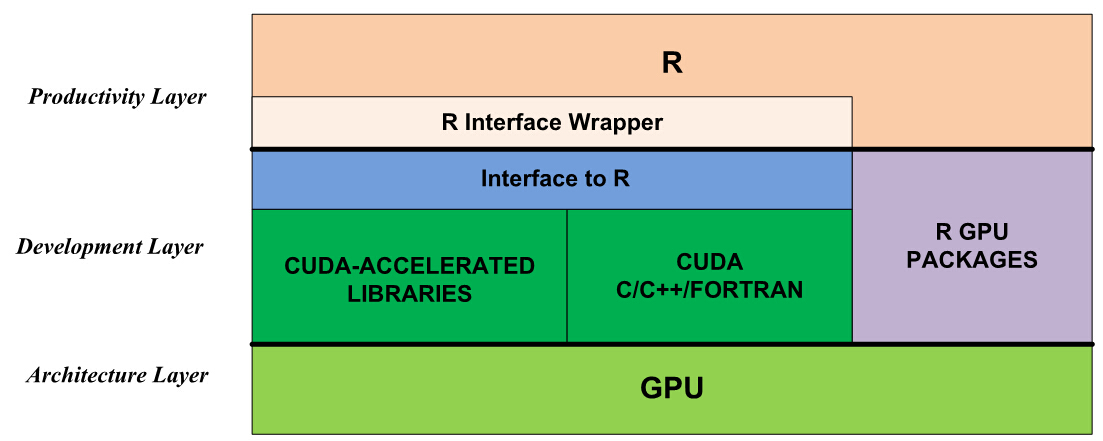

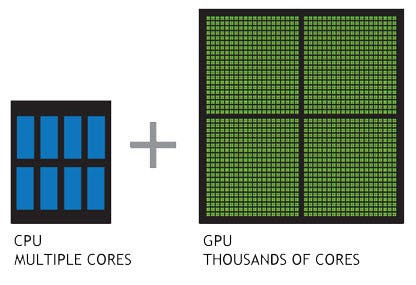

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

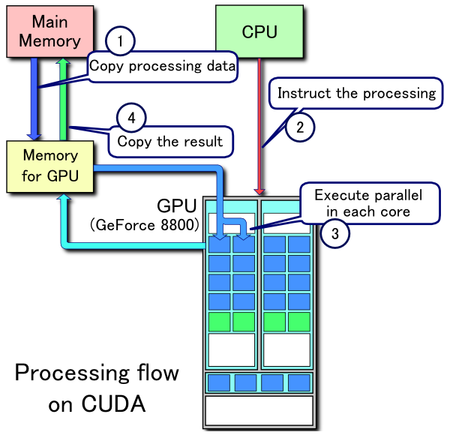

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers